There are other examples out there using the publicly-available OpenAI service, but what if you’re concerned about “pulling a Samsung” and inadvertently sending your company’s IP to the public service when trying to triage a bug report? A simple error message can accidentally provide context about what you’re working on, such as project names, file types, method names, and any other context you intend to use in your prompt.

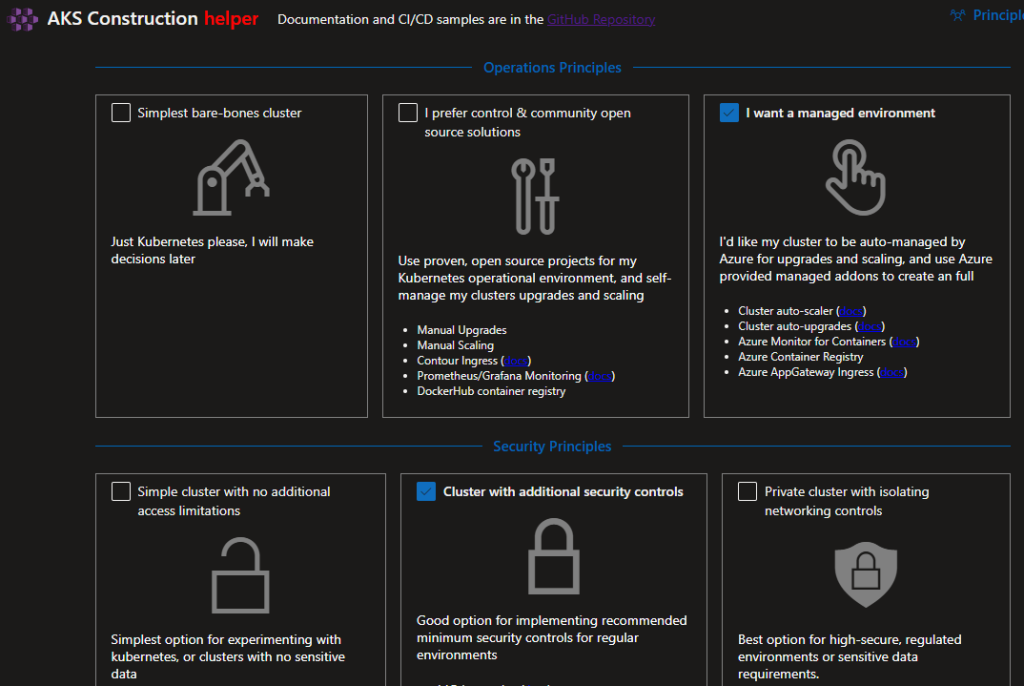

OpenAI is an AI research lab and company known for their advanced language model GPT-3, while the Azure OpenAI Service is a specific offering within Microsoft Azure that integrates OpenAI’s technologies into the Azure platform, allowing developers to leverage their powerful AI models at scale. Azure OpenAI (AOAI) also helps keep your company’s data in your own Azure tenant.

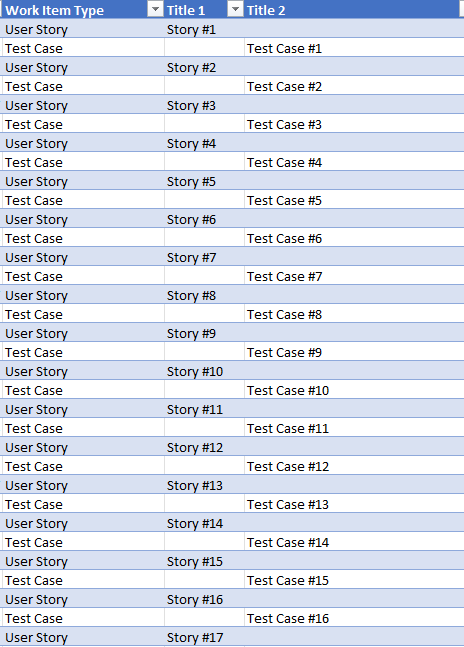

In my little sample implementation, I used AOAI, a Logic App, and a small Azure Function to put together an “automatic AI bug triage” service hooked up to Azure DevOps. This approach provides a simple way to send problem information to an OpenAI model to help solve a bug, without that information being used to train the public OpenAI model.

First, get yourself access to the Azure OpenAI service by requesting it here.

Once you have your service provisioned, you’ll be ready to connect to it from your Azure Function (more on that shortly).

Azure Logic App

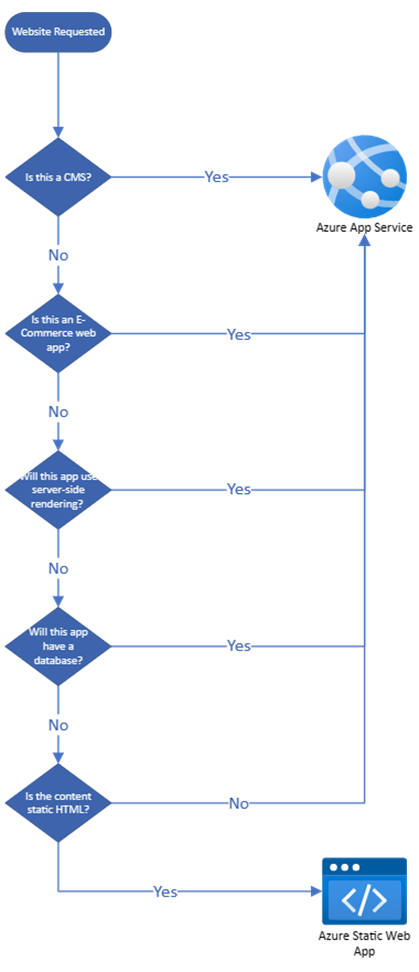

The scaffolding for this triage service is the Azure Logic App, which helps orchestrate the capturing of the bug submission, calling the Function, and putting the results back into the bug in Azure DevOps.

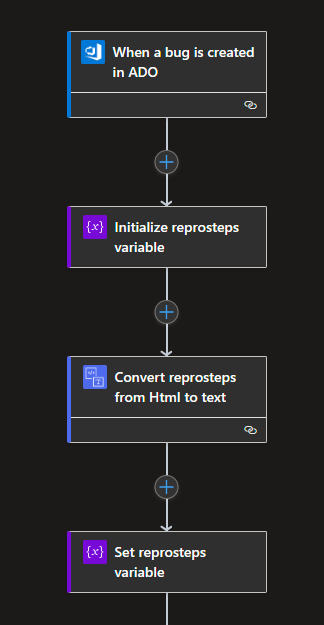

Below, you see the first steps of the Logic App. It’s triggered when a bug is created in Azure DevOps (you must specify your org and project for scope, and can provide other trigger conditions if you like), and it captures the value from the “Repro Steps” field. That value is then “scrubbed” to plain text.

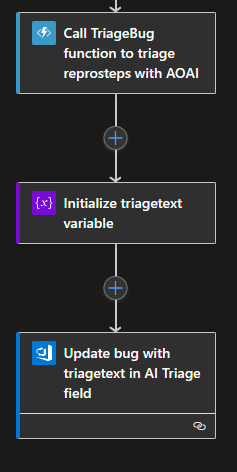
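For a sense of what that “scrubbing” step involves, here’s a minimal C# sketch of the same idea, assuming the Repro Steps value arrives as HTML. The `ToPlainText` helper is hypothetical (it isn’t part of the Logic App above), and a regex-based strip like this is only a rough approximation, not a full HTML parser.

```csharp
using System.Net;
using System.Text.RegularExpressions;

// Hypothetical helper: reduce an HTML fragment to a single line of plain text.
static string ToPlainText(string html)
{
    if (string.IsNullOrEmpty(html)) return string.Empty;

    // Replace every tag with a space so adjacent words don't run together.
    var noTags = Regex.Replace(html, "<[^>]+>", " ");

    // Turn entities such as &amp; back into the characters they represent.
    var decoded = WebUtility.HtmlDecode(noTags);

    // Collapse runs of whitespace and trim the ends.
    return Regex.Replace(decoded, @"\s+", " ").Trim();
}
```

Doing this before calling the model keeps markup noise out of the prompt, whether it happens in the Logic App or in code.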

Next, we call the TriageBug Azure Function and pass it the value of the ‘reprosteps’ variable. After capturing the Function’s response in a ‘triagetext’ variable, the Logic App writes that value back to the original bug (work item). In this example, I put it in a custom “AI Triage” field.

Azure Function to Call Azure OpenAI

Now, I could have skipped using a Function and just called the AOAI service directly via its REST endpoint, but why not modularize that a little? A Function provides more development control over prompting (or prompt chaining), which hopefully leads to a more accurate response from the service.
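For comparison, here’s roughly what the direct REST route could look like in C#. This is a sketch under a few assumptions: the helper names are hypothetical, the `2023-05-15` API version and the `max_tokens` value are just example choices, and the deployment is assumed to be a completions-style model.

```csharp
using System;
using System.Net.Http;
using System.Text;
using System.Text.Json;

// Hypothetical helper: build a POST to the deployment's completions endpoint.
static HttpRequestMessage BuildCompletionRequest(
    string endpoint, string deployment, string apiKey, string prompt)
{
    // Assumed API version; check which versions your resource supports.
    var url = $"{endpoint.TrimEnd('/')}/openai/deployments/{deployment}/completions?api-version=2023-05-15";

    // max_tokens = 800 is an arbitrary example value.
    var body = JsonSerializer.Serialize(new { prompt, max_tokens = 800 });

    var request = new HttpRequestMessage(HttpMethod.Post, url)
    {
        Content = new StringContent(body, Encoding.UTF8, "application/json")
    };

    // Azure OpenAI key authentication uses the "api-key" header.
    request.Headers.Add("api-key", apiKey);
    return request;
}

// Hypothetical helper: pull the first completion's text out of the JSON response.
static string ExtractCompletionText(string responseJson)
{
    using var doc = JsonDocument.Parse(responseJson);
    return doc.RootElement.GetProperty("choices")[0].GetProperty("text").GetString() ?? string.Empty;
}
```

You would send the request with an `HttpClient` and pass the response body to `ExtractCompletionText`. That works, but every prompt tweak then lives in string-building code like this, which is the kind of thing a Function plus an SDK keeps tidier.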

In my Function I used the Microsoft Semantic Kernel, a lightweight SDK that provides easy access to AOAI. Here’s an easy method to get an IKernel object:

    private static IKernel GetSemanticKernel()
    {
        // Build a default kernel, then register the Azure OpenAI text completion
        // service using the deployment name, endpoint, and key.
        var kernel = Kernel.Builder.Build();
        kernel.Config.AddAzureTextCompletionService(
            _OpenAIDeploymentName,
            _OpenAIEndpoint,
            _OpenAIKey
        );
        return kernel;
    }

You can, for example purposes, hard-code the above ‘_OpenAIDeploymentName’, ‘_OpenAIEndpoint’, and ‘_OpenAIKey’ variables, but it’s recommended that you pull those values from Key Vault.
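One common way to do that in Azure Functions is to store each secret in Key Vault, point an app setting at it with a Key Vault reference, and read the setting as an environment variable at runtime. Here’s a minimal sketch of the reading side, assuming a hypothetical `GetRequiredSetting` helper and hypothetical setting names such as `OpenAIKey`:

```csharp
using System;

// Hypothetical helper: read an app setting and fail fast if it is missing.
// In Azure Functions, app settings (including Key Vault references) are
// exposed to the code as environment variables.
static string GetRequiredSetting(string name)
{
    var value = Environment.GetEnvironmentVariable(name);
    if (string.IsNullOrWhiteSpace(value))
    {
        throw new InvalidOperationException($"Missing app setting '{name}'.");
    }
    return value;
}
```

With this in place, the three variables could be assigned from `GetRequiredSetting("OpenAIDeploymentName")`, `GetRequiredSetting("OpenAIEndpoint")`, and `GetRequiredSetting("OpenAIKey")` (again, those setting names are placeholders). Failing fast on a missing setting gives a clearer error than a null key surfacing later as a failed call to the service.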

I created a quick static method to call AOAI. I hard-coded the prompt to send to the service, but you could easily extend it to pull a prompt from somewhere else:

    static async Task<string> AskForHelp(string msg)
    {
        IKernel kernel = GetSemanticKernel();

        // {{$input}} is replaced with the value passed to InvokeAsync.
        var prompt = @"As a software developer, identify potential causes, fixes, and recommendations
    for the following error message (include references as well): {{$input}}";

        var fnc = kernel.CreateSemanticFunction(prompt);
        var result = await fnc.InvokeAsync(msg);
        return result.ToString();
    }

The Function trigger was only a small edit from the base code created when using an HTTP trigger:

public static async Task<IActionResult> Run(

[HttpTrigger(AuthorizationLevel.Function, "get", "post", Route = null)] HttpRequest req,

ILogger log)

{

log.LogInformation("TriageBug function processed a request.");

string message = req.Query["message"];

string requestBody = await new StreamReader(req.Body).ReadToEndAsync();

dynamic data = JsonConvert.DeserializeObject(requestBody);

message ??= data?.message;

string responseMessage = await AskForHelp(message);

return new OkObjectResult(responseMessage.Trim());

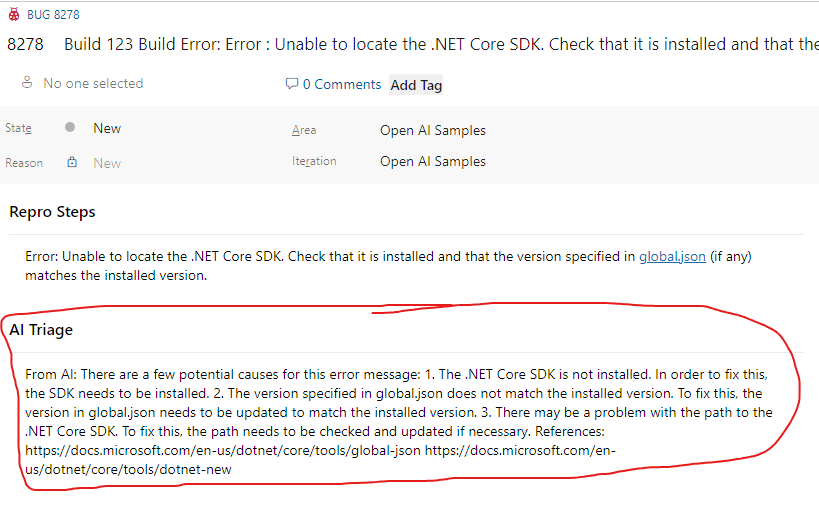

}If I have the Logic App enabled and file a bug in ADO, the Logic App runs and I see something like this:

So there you go!

Is it perfect? Nope. There are plenty of things you can adjust/add here to make this more robust, such as:

- Use more AI to “learn” which prompt works best (based on extracted text) to get more accurate results.

- Return the triage text as HTML to enable hyperlinks and better formatting.

- Uh.. maybe a little error handling? 😀

- Use more values from ADO (additional bug field values, project name, description, etc.) to provide better context for the prompt.
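To make a couple of those ideas concrete, here’s a minimal sketch that adds basic input validation and folds extra ADO values into the text sent to the prompt. The `BuildTriageInput` helper, its parameters, and the 4,000-character cap are all assumptions for illustration, not part of the sample above.

```csharp
using System;
using System.Text;

// Hypothetical helper: validate the repro steps and prepend optional context.
static string BuildTriageInput(
    string reproSteps, string title = "", string project = "", int maxLength = 4000)
{
    // Basic error handling: refuse to call the model with nothing to triage.
    if (string.IsNullOrWhiteSpace(reproSteps))
    {
        throw new ArgumentException("Repro steps are required.", nameof(reproSteps));
    }

    var sb = new StringBuilder();
    if (!string.IsNullOrWhiteSpace(project)) sb.AppendLine($"Project: {project}");
    if (!string.IsNullOrWhiteSpace(title)) sb.AppendLine($"Title: {title}");
    sb.Append("Repro steps: ").Append(reproSteps.Trim());

    // Cap the length so an oversized bug report doesn't blow past the model's limits.
    var text = sb.ToString();
    return text.Length <= maxLength ? text : text.Substring(0, maxLength);
}
```

The result could be passed to `AskForHelp` in place of the raw repro steps, and the Function could catch the `ArgumentException` and return a 400 response instead of calling the service.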

I’m sure there are more. Hopefully this gets you started!